This edition applies to version 3, release 0 of the IBM Software Development Kit for Multicore Acceleration (Product number 5724-S84) and to all subsequent releases and modifications until otherwise indicated in new editions.

This edition replaces SC33-8325-01.

The IBM Software Development Kit for Multicore Acceleration Version 3.0 (SDK 3.0) is a complete package of tools to enable you to program applications for the Cell Broadband Engine(TM) (Cell BE) processor. The SDK 3.0 is composed of development tool chains, software libraries and sample source files, a system simulator, and a Linux(R) kernel, all of which fully support the capabilities of the Cell BE.

This book describes how to use the SDK 3.0 to write applications. How to install SDK 3.0 is described in a separate manual, Software Development Kit for Multicore Acceleration Version 3.0 Installation Guide, and there is also a programming tutorial to help get you started.

Each section of this book covers a different topic:

This book includes information about the new functionality delivered with the SDK 3.0, and completely replaces the previous version of this book. This new information includes:

For information about differences between SDK 3.0 and previous versions, see Appendix A. Changes to SDK for this release.

Cell BE applications can be developed on the following platforms:

The supported languages are:

This publication contains documentation that may be applied to certain environments on an "as-is" basis. Those environments are not supported by IBM, but wherever possible, workarounds to problems are provided in the respective forums.

The SDK 3.0 is available through Passport Advantage(R) with full support at:

http://www.ibm.com/software/passportadvantage

You can locate documentation and other resources on the World Wide Web. Refer to the following Web sites:

http://www.ibm.com/bladecenter/

For service information, select Support.

http://www.ibm.com/developerworks/power/cell/

To access the Cell BE forum on developerWorks, select Community.

http://www.bsc.es/projects/deepcomputing/linuxoncell

http://www.gnu.org/software/gdb/gdb.html

This version (SDK 3.0) of the SDK supersedes all previous versions of the SDK.

For a list of documentation referenced in this Programmer's Guide, see Appendix B. Related documentation.

This section describes the contents of the SDK 3.0, where it is installed on the system, and how the various components work together. It covers the following topics:

The GNU tool chain contains the GCC C-language compiler (GCC compiler) for the PPU and the SPU. For the PPU it is a replacement for the native GCC compiler on PowerPC (PPC) platforms and it is a cross-compiler on X86. The GCC compiler for the PPU is the default and the Makefiles are configured to use it when building the libraries and samples.

The GCC compiler also contains a separate SPE cross-compiler that supports the standards defined in the following documents:

The associated assembler and linker additionally support the SPU Assembly Language Specification V1.6. The assembler and linker are common to both the GCC compiler and the IBM XL C/C++ compiler.

GDB support is provided for both PPU and SPU debugging, and the debugger client can be in the same process or a remote process. GDB also supports combined (PPU and SPU) debugging.

On a non-PPC system, the install directory for the GNU tool chain is /opt/cell/toolchain. There is a single bin subdirectory, which contains both PPU and SPU tools.

On a PPC64 or BladeCenter QS21, both tool chains are installed into /usr. See System root directories for further information.

IBM XL C/C++ for Multicore Acceleration for Linux is an advanced, high-performance cross-compiler that is tuned for the CBEA. The XL C/C++ compiler, which is hosted on an x86 system, an IBM PowerPC technology-based system, or a BladeCenter QS21, generates code for the PPU or SPU. The compiler requires the GCC toolchain for the CBEA, which provides tools for cross-assembling and cross-linking applications for both the PPE and SPE.

IBM XL C/C++ supports the revised 2003 International C++ Standard ISO/IEC 14882:2003(E), Programming Languages -- C++ and the ISO/IEC 9899:1999, Programming Languages -- C standard, also known as C99. The compiler also supports:

The XL C/C++ compiler available for the SDK 3.0 supports the language extensions specified in the IBM XL C/C++ Advanced Edition for Multicore Acceleration for Linux V9.0 Language Reference.

The XL compiler also contains a separate SPE cross-compiler that supports the standards defined in the following documents:

For information about the XL C/C++ compiler invocation commands and a complete list of options, refer to the IBM XL C/C++ Advanced Edition for Multicore Acceleration for Linux V9.0 Compiler Reference.

Program optimization is described in IBM XL C/C++ Advanced Edition for Multicore Acceleration for Linux V9.0 Programming Guide.

The XL C/C++ for Multicore Acceleration for Linux compiler is installed into the /opt/ibmcmp/xlc/cbe/<compiler version number> directory. Documentation is located on the following Web site:

http://publib.boulder.ibm.com/infocenter/cellcomp/v9v111/index.jsp

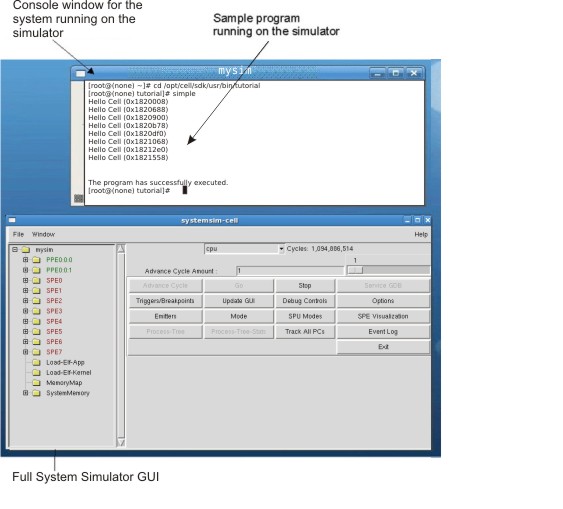

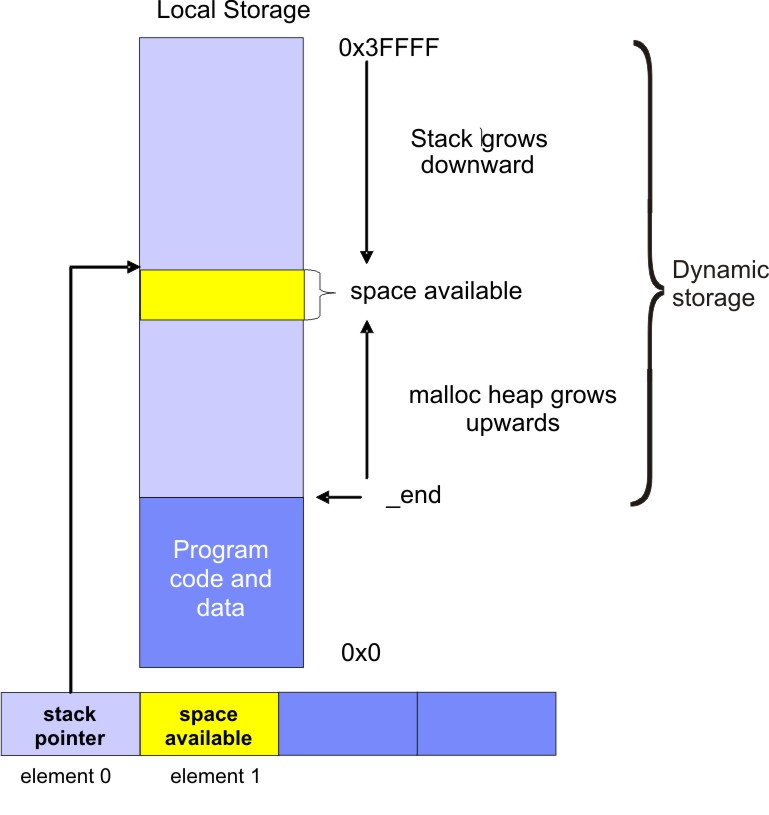

The IBM Full-System Simulator (referred to as the simulator in this document) is a software application that emulates the behavior of a full system that contains a Cell BE processor. You can start a Linux operating system on the simulator and run applications on the simulated operating system. The simulator also supports the loading and running of statically-linked executable programs and standalone tests without an underlying operating system.

The simulator infrastructure is designed for modeling processor and system-level architecture at levels of abstraction, which vary from functional to performance simulation models with a number of hybrid fidelity points in between:

This simulation model is useful for software development and debugging when a precise measure of execution time is not significant. Functional simulation proceeds much more rapidly than performance simulation, and so is also useful for fast-forwarding to a specific point of interest.

Operation latencies are modeled dynamically to account for both processing time and resource constraints. Performance simulation models have been correlated against hardware or other references to acceptable levels of tolerance.

The simulator for the Cell BE processor provides a cycle-accurate SPU core model that can be used for performance analysis of computationally-intense applications. The simulator for SDK 3.0 provides additional support for performance simulation. This is described in the IBM Full-System Simulator Users Guide.

The simulator can also be configured to fast-forward the simulation, using a functional model, to a specific point of interest in the application and to switch to a timing-accurate mode to conduct performance studies. This means that various types of operational details can be gathered to help you understand real-world hardware and software systems.

See the /opt/ibm/systemsim-cell/doc subdirectory for complete documentation including the simulator user's guide. The prerelease name of the simulator is "Mambo" and this name may appear in some of the documentation.

The simulator for the Cell BE processor is also available as an independent technology at

http://www.alphaworks.ibm.com/tech/cellsystemsim

The system root image for the simulator is a file that contains a disk image of Fedora 7 files, libraries, and binaries that can be used within the system simulator. This disk image file is preloaded with a full range of Fedora 7 utilities and also includes all of the Cell BE Linux support libraries described in Performance support libraries and utilities.

This RPM file is the largest of the RPM files and when it is installed, it takes up to 1.6 GB on the host server's hard disk. See also System root directories.

The system root image for the simulator must be located either in the current directory when you start the simulator or the default /opt/ibm/systemsim-cell/images/cell directory. The cellsdk script automatically puts the system root image into the default directory.

You can mount the system root image to see what it contains. Assuming a mount point of /mnt/cell-sdk-sysroot, which is the mount point used by the cellsdk_sync_simulator script, the command to mount the system root image is:

mount -o loop /opt/ibm/systemsim-cell/images/cell/sysroot_disk /mnt/cell-sdk-sysroot/

The command to unmount the image is:

umount /mnt/cell-sdk-sysroot/

Do not attempt to mount the image on the host system while the simulator is running. You should always unmount the system root image before you start the simulator. You should not mount the system root image to the same point as the root on the host server because the system can become corrupted and fail to boot.

You can change files on the system root image disk in the following ways:

For the BladeCenter QS21, the kernel is installed into the /boot directory, yaboot.conf is modified and a reboot is required to activate this kernel. The cellsdk install task is documented in the SDK 3.0 Installation Guide.

The following libraries are described in this section:

The SPE Runtime Management Library (libspe) constitutes the standardized low-level application programming interface (API) for application access to the Cell BE SPEs. This library provides an API to manage SPEs that is neutral with respect to the underlying operating system and its methods. Implementations of this library can provide additional functionality that allows for access to operating system or implementation-dependent aspects of SPE runtime management. These capabilities are not subject to standardization and their use may lead to non-portable code and dependencies on certain implemented versions of the library.

The elfspe is a PPE program that allows an SPE program to run directly from a Linux command prompt without needing a PPE application to create an SPE thread and wait for it to complete.

For the BladeCenter QS21, the SDK installs the libspe headers, libraries, and binaries into the /usr directory and the standalone SPE executive, elfspe, is registered with the kernel during boot by commands added to /etc/rc.d/init.d using the binfmt_misc facility.

For the simulator, the libspe and elfspe binaries and libraries are preinstalled in the same directories in the system root image and no further action is required at install time.

SPE Runtime Management Library version 2.2 is an upgrade to version 2.1. For more information, see the SPE Runtime Management Library Reference.

The traditional math functions are scalar instructions, and do not take advantage of the powerful Single Instruction, Multiple Data (SIMD) vector instructions available in both the PPU and SPU in the Cell BE Architecture. SIMD instructions perform computations on short vectors of data in parallel, instead of on individual scalar data elements. They often provide significant increases in program speed because more computation can be done with fewer instructions.

The SIMD math library provides short vector versions of the math functions. The MASS library provides long vector versions. These vector versions conform as closely as possible to the specifications set out by the scalar standards.

The SIMD math library is provided by the SDK as both a linkable library archive and as a set of inline function headers. The names of the SIMD math functions are formed from the names of the scalar counterparts by appending a vector type suffix to the standard scalar function name. For example, the SIMD version of the absolute value function abs(), which acts on a vector of long integers, is called absi4(). Inline versions of functions are prefixed with the character "_" (underscore), so the inline version of absi4() is called _absi4().
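The naming convention and the lane-wise behavior can be illustrated with a portable sketch. The type `vec_int4_sketch` and the function `sketch_absi4()` below are hypothetical stand-ins written with the GCC/Clang `vector_size` extension. They are not the SDK's implementation, which operates on the native PPU and SPU vector types.

```c
#include <stdlib.h>

/* Hypothetical stand-in for a vector of four 32-bit integers. It uses the
   GCC/Clang vector_size extension, not the SDK's native vector types. */
typedef int vec_int4_sketch __attribute__((vector_size(16)));

/* Scalar reference: one element per call, as with the C library abs(). */
static int scalar_abs(int x)
{
    return abs(x);
}

/* Short-vector counterpart: one call processes all four lanes.
   As with abs(), the result is undefined for INT_MIN. */
static vec_int4_sketch sketch_absi4(vec_int4_sketch v)
{
    vec_int4_sketch r = v;
    for (int i = 0; i < 4; i++)
        r[i] = scalar_abs(v[i]);
    return r;
}
```

On real SIMD hardware the four lanes are processed by a single instruction rather than a loop; the loop here only makes the per-lane semantics explicit.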

For more information about the SIMD math library, refer to SIMD Math Library Specification for Cell Broadband Engine Architecture Version 1.1.

The Mathematical Acceleration Subsystem (MASS) consists of libraries of mathematical intrinsic functions, which are tuned specifically for optimum performance on the Cell BE processor. Currently the 32-bit, 64-bit PPU, and SPU libraries are supported.

These libraries:

You can find information about using these libraries on the MASS Web site:

http://www.ibm.com/software/awdtools/mass

The ALF provides a programming environment for data and task parallel applications and libraries. The ALF API provides library developers with a set of interfaces to simplify library development on heterogeneous multi-core systems. Library developers can use the provided framework to offload computationally intensive work to the accelerators. More complex applications can be developed by combining several function offload libraries. Application programmers can also choose to implement their applications directly to the ALF interface.

ALF supports the multiple-program-multiple-data (MPMD) programming model, where multiple programs can be scheduled to run on multiple accelerator elements at the same time.

The ALF functionality includes:

With the provided platform-independent API, you can also create descriptions for multiple compute tasks and define their execution order by defining task dependencies. Task parallelism is accomplished by having tasks without direct or indirect dependencies between them. The ALF runtime provides an optimal parallel scheduling scheme for the tasks based on given dependencies.
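The relationship between task dependencies and task parallelism can be sketched in plain C. The code below does not use the ALF API and does not describe how the ALF runtime schedules work. The names `task_graph_sketch` and `schedule_waves()` are hypothetical. The sketch groups tasks into "waves" so that tasks in the same wave have no direct or indirect dependency on each other.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_TASKS 16

/* depends_on[i][j] is true when task i must wait for task j to finish.
   count must not exceed MAX_TASKS. */
typedef struct {
    size_t count;
    bool depends_on[MAX_TASKS][MAX_TASKS];
} task_graph_sketch;

/* Assigns each task a wave number. Tasks that share a wave could run in
   parallel. Returns the number of waves, or -1 if the dependencies form
   a cycle. */
static int schedule_waves(const task_graph_sketch *g, int wave[MAX_TASKS])
{
    size_t done = 0;
    int current = 0;

    for (size_t i = 0; i < g->count; i++)
        wave[i] = -1;                   /* -1 means "not yet scheduled" */

    while (done < g->count) {
        size_t scheduled_now = 0;
        for (size_t i = 0; i < g->count; i++) {
            if (wave[i] != -1)
                continue;
            bool ready = true;
            for (size_t j = 0; j < g->count; j++) {
                /* Every prerequisite must sit in an earlier wave. */
                if (g->depends_on[i][j] &&
                    (wave[j] == -1 || wave[j] == current))
                    ready = false;
            }
            if (ready) {
                wave[i] = current;
                scheduled_now++;
            }
        }
        if (scheduled_now == 0)
            return -1;                  /* cycle: no task can make progress */
        done += scheduled_now;
        current++;
    }
    return current;
}
```

For a diamond-shaped graph, where tasks 1 and 2 depend on task 0 and task 3 depends on both 1 and 2, the sketch produces three waves, with tasks 1 and 2 sharing the middle wave.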

From the application or library programmer's point of view, ALF consists of the following two runtime components:

The host runtime library provides the host APIs to the application. The accelerator runtime library provides the APIs to the application's accelerator code, usually the computational kernel and helper routines. This division of labor enables programmers to specialize in different parts of a given parallel workload.

The ALF design enables a separation of work. There are three distinct types of task within a given application:

The runtime framework handles the underlying task management, data movement, and error handling, which means that the focus is on the kernel and the data partitioning, not the direct memory access (DMA) list creation or the lock management on the work queue.

The ALF APIs are platform-independent and their design is based on the fact that many applications targeted for Cell BE or multi-core computing follow the general usage pattern of dividing a set of data into self-contained blocks, creating a list of data blocks to be computed on the SPE, and then managing the distribution of that data to the various SPE processes. This type of control and compute process usage scenario, along with the corresponding work queue definition, are the fundamental abstractions in ALF.
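The usage pattern described above (divide the data into self-contained blocks, build a list of blocks, distribute them) can be sketched without any SDK headers. The names `work_block_sketch` and `partition_work()` are hypothetical and are not part of the ALF API. The round-robin assignment is an assumption made for illustration, not a description of the ALF scheduler.

```c
#include <stddef.h>

/* One self-contained unit of work: a contiguous slice of the input data
   and the index of the accelerator (SPE) it is handed to. */
typedef struct {
    size_t offset;      /* index of the first element in the slice */
    size_t length;      /* number of elements in the slice */
    unsigned worker;    /* accelerator that receives this block */
} work_block_sketch;

/* Splits total_elems into blocks of at most block_elems elements and deals
   them out round-robin to num_workers accelerators. Returns the number of
   blocks written, or 0 if the arguments are invalid or out[] is too small. */
static size_t partition_work(size_t total_elems, size_t block_elems,
                             unsigned num_workers,
                             work_block_sketch *out, size_t out_capacity)
{
    if (block_elems == 0 || num_workers == 0)
        return 0;

    size_t needed = (total_elems + block_elems - 1) / block_elems;
    if (needed > out_capacity)
        return 0;

    for (size_t i = 0; i < needed; i++) {
        size_t offset = i * block_elems;
        size_t remaining = total_elems - offset;
        out[i].offset = offset;
        out[i].length = remaining < block_elems ? remaining : block_elems;
        out[i].worker = (unsigned)(i % num_workers);
    }
    return needed;
}
```

For example, 10 elements with a block size of 4 and two workers yield three blocks of lengths 4, 4, and 2, assigned to workers 0, 1, and 0.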

The DaCS library provides a set of services for handling process-to-process communication in a heterogeneous multi-core system. In addition to the basic message passing service these include:

The DaCS services are implemented as a set of APIs providing an architecturally neutral layer for application developers. They structure the processing elements, referred to as DaCS Elements (DE), into a hierarchical topology. This includes general purpose elements, referred to as Host Elements (HE), and special processing elements, referred to as Accelerator Elements (AE). Host elements usually run a full operating system and submit work to the specialized processes which run in the Accelerator Elements.

This section provides an overview of the following prototype libraries, which are shipped with SDK 3.0:

This prototype library handles a wide range of FFTs, and consists of the following:

The implementation manages sizes up to 10000 and handles multiples of 2, 3, and 5 as well as powers of those factors, plus one arbitrary factor. User code running on the PPU uses the CBE FFT library by calling either the 1D or the 2D streaming functions.

Both parts of the library run using a common interface that contains an initialization and termination step, and an execution step which can process "one-at-a-time" requests (streaming) or entire arrays of requests (batch).

Enter the following to view additional documentation for the prototype FFT library:

man /opt/cell/sdk/prototype/usr/include/libfft.3

The Monte Carlo libraries are a Cell BE implementation of Random Number Generator (RNG) algorithms and transforms. The objective of this library is to provide functions needed to perform Monte Carlo simulations.
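As a reminder of how a random number generator and a transform combine in a Monte Carlo simulation, the following portable sketch estimates pi. It does not use the SDK's Monte Carlo library. The generator shown, xorshift32, is a well-known small generator chosen only for brevity and is an assumption of this example, as are all function names.

```c
#include <stdint.h>

/* xorshift32: used here only for illustration. It is NOT one of the
   algorithms in the SDK library. The state must be nonzero. */
static uint32_t xorshift32(uint32_t *state)
{
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    *state = x;
    return x;
}

/* Transform: map 32 random bits to a double in the range [0, 1). */
static double to_unit_interval(uint32_t bits)
{
    return (bits >> 8) / 16777216.0;    /* keep 24 bits, divide by 2^24 */
}

/* Monte Carlo estimate of pi: the fraction of random points in the unit
   square that fall inside the quarter circle approaches pi/4. */
static double estimate_pi(uint32_t seed, uint32_t samples)
{
    uint32_t state = seed ? seed : 1u;
    uint32_t inside = 0;

    for (uint32_t i = 0; i < samples; i++) {
        double x = to_unit_interval(xorshift32(&state));
        double y = to_unit_interval(xorshift32(&state));
        if (x * x + y * y < 1.0)
            inside++;
    }
    return samples ? 4.0 * inside / samples : 0.0;
}
```

The accuracy of the estimate improves only with the square root of the sample count, which is why Monte Carlo workloads benefit from fast, parallel random number generation.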

The following RNG algorithms are implemented:

The following transforms are provided:

The example libraries package provides a set of optimized library routines that greatly reduce the development cost and enhance the performance of Cell BE programs.

To demonstrate the versatility of the Cell BE architecture, a variety of application-oriented libraries are included, such as:

Additional examples and demos show how you can exploit the on-chip computational capacity.

Both the binary and the source code are shipped in separate RPMs. The RPM names are:

For each of these, one RPM contains the prebuilt binaries, which are installed into /opt/cell/sdk/usr, and one RPM contains the source in a tar file. For example, cell-demos-source-3.0-1.rpm contains demos_source.tar, and this tar file contains all of the source code.

The default installation process installs the binaries and installs the source tar files. You need to decide into which directory you want to untar those files, either into /opt/cell/sdk/src, or into a 'sandbox' directory.

The libraries and examples RPMs have been partitioned into the following subdirectories.

The following support libraries and utilities are provided by the SDK to help you develop and performance-test your Cell BE applications.

The SPU static timing tool, spu_timing, annotates an SPU assembly file with scheduling, timing, and instruction issue estimates assuming a straight, linear execution of the program. The tool generates a textual output of the execution pipeline of the SPE instruction stream from this input assembly file. Run spu_timing --help to see its usage syntax.

The SPU timing tool is located in the /opt/cell/sdk/usr/bin directory.

OProfile is a tool for profiling user and kernel level code. It uses the hardware performance counters to sample the program counter every N events. You specify the value of N as part of the event specification. The system enforces a minimum value on N to ensure the system does not get completely swamped trying to capture a profile.

Make sure you select a large enough value of N to ensure the overhead of collecting the profile is not excessively high.
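The trade-off behind the choice of N can be made concrete with a small calculation. The sketch below is not part of OProfile. The function names are hypothetical, and the per-sample cost is an assumed parameter that you would have to measure on your own system.

```c
#include <stdint.h>

/* With one sample taken every n_events occurrences of an event, a run that
   generates total_events occurrences yields roughly this many samples. */
static uint64_t expected_samples(uint64_t total_events, uint64_t n_events)
{
    return n_events == 0 ? 0 : total_events / n_events;
}

/* Rough profiling overhead as a fraction of useful work, assuming the
   profiled event is CPU cycles and every sample costs cycles_per_sample
   cycles to record (an assumed, system-dependent figure). */
static double sampling_overhead(uint64_t n_events, uint64_t cycles_per_sample)
{
    return n_events == 0 ? 0.0
                         : (double)cycles_per_sample / (double)n_events;
}
```

Under these assumptions, sampling every 100000 cycles over a run of 3.2 billion cycles yields about 32000 samples, and an assumed cost of 2000 cycles per sample corresponds to an overhead of about 2%. Increasing N lowers the overhead but also reduces the number of samples.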

The opreport tool produces the output report. Reports can be generated based on the file names that correspond to the samples, symbol names or annotated source code listings.

How to use OProfile and the postprocessing tool is described in the user manual available at:

http://oprofile.sourceforge.net/doc/

The current SDK 3.0 version of OProfile for Cell BE supports profiling on the POWER(TM) processor events and SPU cycle profiling. These events include cycles as well as the various processor, cache and memory events. It is possible to profile on up to four events simultaneously on the Cell BE system. There are restrictions on which of the PPU events can be measured simultaneously. (The tool now verifies that the multiple events specified can be profiled simultaneously. In the previous release it was up to you to verify that.) When using SPU cycle profiling, events must be within the same group due to restrictions in the underlying hardware support for the performance counters. You can use the opcontrol --list-events command to view the events and which group contains each event.

There is one set of performance counters for each node that are shared between the two CPUs on the node. For a given profile period, only half of the time is spent collecting data for the even CPUs and half of the time for the odd CPUs. You may need to allow more time to collect the profile data across all CPUs.

opcontrol --start-daemon

When SPU cycle profiling is used, the opcontrol command is configured for separating the profile based on SPUs and on the library. This corresponds to specifying --separate=cpu and --separate=lib. The separation by CPU is required because it is possible to have multiple SPU binary images embedded into the executable file or into a shared library. So for a given executable, the various SPUs may be running different SPU images.

With --separate=cpu, the image and corresponding symbols can be displayed for each SPU. You can use the opreport --merge option to create a single report for all SPUs that shows the counts for each symbol in the various embedded SPU binaries. By default, opreport does not display the app name column when it reports samples for a single application, such as when it profiles a single SPU application. For opreport to attribute samples to a binary image, the opcontrol script defaults to using --separate=lib when profiling SPU applications so that the image name column is always displayed in the generated reports.

The report file uses the term CPUs when the event is SPU\_CYCLES. In this case, CPUs actually refer to the various SPUs in the system. For all other events, the CPU term refers to the virtual PPU processors.

With SPU profiling, opreport's --long-filenames option may not print the full path of the SPU binary image for which samples were collected. Short image names are used for SPU applications that employ the technique of embedding SPU images in another file (executable or shared library). The embedded SPU ELF data contains only the filename and no path information to the SPU binary file being embedded because this file may not exist or be accessible at runtime. You must have sufficient knowledge of the application's build process to be able to correlate the SPU binary image names found in the report to the application's source files.

Compile the application with -g and generate the OProfile report with -g to facilitate finding the right source file(s) to focus on.

Generally, when the report contains information about a single application, opreport does not include the report column for the application name. It is assumed that the performance analyst knows the name of the application being profiled.

The cell-perf-counter (cpc) tool is used for setting up and using the hardware performance counters in the Cell BE processor. These counters allow you to see how many times certain hardware events are occurring, which is useful if you are analyzing the performance of software running on a Cell BE system. Hardware events are available from all of the logical units within the Cell BE processor, including the PPE, SPEs, interface bus, and memory and I/O controllers. Four 32-bit counters, which can also be configured as pairs of 16-bit counters, are provided in the Cell BE performance monitoring unit (PMU) for counting these events.

The cpc tool also makes use of the hardware sampling capabilities of the Cell BE PMU. This feature allows the hardware to collect very precise counter data at programmable time intervals. The accumulated data can be used to monitor the changes in performance of the Cell BE system over longer periods of time.

The cpc tool provides a variety of output formats for the counter data. Simple text output is shown in the terminal session, HTML output is available for viewing in a Web browser, and XML output can be generated for use by higher-level analysis tools such as the Visual Performance Analyzer (VPA).

You can find details in the documentation and manual pages included with the cellperfctr-tools package, which can be found in the /usr/share/doc/cellperfctr-<version>/ directory after you have installed the package.

IBM Eclipse IDE for the SDK is built upon the Eclipse and C Development Tools (CDT) platform. It integrates the GNU tool chain, compilers, the Full-System Simulator, and other development components to provide a comprehensive, Eclipse-based development platform that simplifies development. The key features include the following:

For information about how to install and remove the IBM Eclipse IDE for the SDK, see the SDK 3.0 Installation Guide.

For information about using the IDE, a tutorial is available. The IDE and related programs must be installed before you can access the tutorial.

The Cell Broadband Engine Architecture (CBEA) is an example of a multi-core hybrid system on a chip, that is, heterogeneous cores integrated on a single processor with an inherent memory hierarchy. Specifically, the synergistic processing elements (SPEs) can be thought of as computational accelerators for a more general purpose PPE core. These concepts of hybrid systems, memory hierarchies and accelerators can be extended more generally to coupled I/O devices, and examples of those systems exist today, for example, GPUs in PCIe slots for workstations and desktops. Similarly, the Cell BE processor is being used in systems as an accelerator, where computationally intensive workloads well suited for the CBEA are off-loaded from a more standard processing node. There are potentially many ways to move data and functions from a host processor to an accelerator processor and vice versa.

In order to provide a consistent methodology and set of application programming interfaces (APIs) for a variety of hybrid systems, including the Cell BE SoC hybrid system, the SDK has implementations of the Cell BE multi-core data communication and programming model libraries, Data and Communication Synchronization and Accelerated Library Framework, which can be used on x86/Linux host process systems with Cell BE-based accelerators. A prototype implementation over sockets is provided so that you can gain experience with this programming style and focus on how to manage the distribution of processing and data decomposition. For example, in the case of hybrid programming when moving data point to point over a network, care must be taken to maximize the computational work done on accelerator nodes potentially with asynchronous or overlapping communication, given the potential cost in communicating input and results.

For more information about the DaCS and ALF programming APIs, refer to Data and Communication Synchronization Library for Hybrid-x86 Programmer's Guide and API Reference and Accelerated Library Framework for Hybrid-x86 Programmer's Guide and API Reference.

This section is a short introduction about programming with the SDK. It covers the following topics:

Refer to the Cell BE Programming Tutorial, the Full-System Simulator User's Guide, and other documentation for more details.

Because of the cross-compile environment and simulator in the SDK, there are several different system root directories. Table 2 describes these directories.

| Directory name | Description |
|---|---|
| Host | The system root for the host system is "/". The SDK is installed relative to this host system root. |
| GCC Toolchain | The system root for the GCC tool chain depends on the host platform. For PPC platforms including the BladeCenter QS21, this directory is the same as the host system root. For x86 and x86-64 systems this directory is /opt/cell/sysroot. The tool chain PPU header and library files are stored relative to the GCC tool chain system root in directories such as usr/include and usr/lib. The tool chain SPU header and library files are stored relative to the GCC tool chain system root in directories such as usr/spu/include and usr/spu/lib. |
| Simulator | The simulator runs using a 2.6.22 kernel and a Fedora 7 system root image. This system root image has a root directory of "/". When this system root image is mounted into a host-based directory such as /mnt/cell-sdk-sysroot, that directory is termed the simulator system root. |
| Examples and Libraries | The Examples and Libraries system root directory is /opt/cell/sysroot. When the samples and libraries are compiled and linked, the resulting header files, libraries and binaries are placed relative to this directory in directories such as usr/include, usr/lib, and /opt/cell/sdk/usr/bin. The libspe library is also installed into this system root. After you have logged in as root, you can synchronize this sysroot directory with the simulator sysroot image file. To do this, use the cellsdk_sync_simulator script with the synch task. The command is: /opt/cell/cellsdk_sync_simulator. This command is very useful whenever a library or sample has been recompiled. The script reduces user error because it provides a standard mechanism to mount the system root image, rsync the contents of the two corresponding directories, and unmount the system root image. |

To verify that the simulator is operating correctly and then run it, issue the following commands:

export PATH=/opt/ibm/systemsim-cell/bin:$PATH

systemsim -g

The systemsim script found in the simulator's bin directory launches the simulator. The -g parameter starts the graphical user interface.

When the GUI is displayed, click Go to start the simulator.

You can use the simulator's GUI to get a better understanding of the Cell BE architecture. For example, the simulator shows two sets of PPE state. This is because the PPE processor core is dual-threaded and each thread has its own registers and context. You can also look at the state of the SPEs, including the state of their Memory Flow Controller (MFC).

The systemsim command syntax is:

systemsim [-f file] [-g] [-n]

where:

| Parameter | Description |
|---|---|
| -f <filename> | specifies an initial run script (TCL file) |
| -g | specifies GUI mode, otherwise the simulator starts in command-line mode |
| -n | specifies that the simulator should not open a separate console window |

You can find documentation about the simulator including the user's guide in the /opt/ibm/systemsim-cell/doc directory.

The callthru utility allows you to copy files to or from the simulated system while it is running. The utility is located in the simulator system root image in the /usr/bin directory.

If you call the utility as:

Redirecting appropriately lets you copy files to and from the host. For example, when the simulator is running on the host, you could copy a Cell BE application into /tmp:

cp matrix_mul /tmp

Then, in the console window of the simulated system, you could access it as follows:

callthru source /tmp/matrix_mul > matrix_mul

chmod +x matrix_mul

./matrix_mul

The /tmp directory is shown as an example only.

The source files for the callthru utility are in /opt/ibm/systemsim-cell/sample/callthru. The callthru utility is built and installed onto the sysroot disk as part of the SDK installation process.

By default the simulator does not write changes back to the simulator system root (sysroot) image. This means that the simulator always begins in the same initial state of the sysroot image. When necessary, you can modify the simulator configuration so that any file changes made by the simulated system to the sysroot image are stored in the sysroot disk file so that they are available to subsequent simulator sessions.

To specify that you want to update the sysroot image file with any changes made in the simulator session, change the newcow parameter on the mysim bogus disk init command in .systemsim.tcl to rw (specifying read/write access) and remove the last two parameters. The following is the changed line from .systemsim.tcl:

mysim bogus disk init 0 $sysrootfile rw

When running the simulator with read/write access to the sysroot image file, you must ensure that the file system in the sysroot image file is not corrupted by incomplete writes or a premature shutdown of the Linux operating system running in the simulator. In particular, you must be sure that Linux writes any cached data out to the file system before exiting the simulator. To do this, issue "sync ; sync" in the Linux console window just before you exit the simulator.

By default the simulator provides an environment that simulates one Cell BE processor. To simulate an environment where two Cell BE processors exist, similar to a BladeCenter QS21, you must enable Symmetric Multiprocessing (SMP) support. A tcl run script, config_smp.tcl, is provided with the simulator to configure it for SMP simulation. For example, the following sequence of commands starts the simulator configured with a graphical user interface and SMP:

export PATH=$PATH:/opt/ibm/systemsim/bin

systemsim -g -f config_smp.tcl

When the simulator is started, it has access to sixteen SPEs across two Cell BE processors.

To enable xclients from the simulator, you need to configure BogusNet (see the BogusNet HowTo), and then perform the following configuration steps:

echo 1 > /proc/sys/net/ipv4/ip_forward

iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

iptables -A FORWARD -i eth0 -o tap0 -m state --state RELATED,ESTABLISHED -j ACCEPT

iptables -A FORWARD -i tap0 -o eth0 -j ACCEPT

Many of the tools provided in SDK 3.0 support multiple implementations of the CBEA. These include the Cell BE processor and a future processor. This future processor is a CBEA-compliant processor with a fully pipelined, enhanced double precision SPU.

The processor supports five optional instructions to the SPU Instruction Set Architecture. These include:

Detailed documentation for these instructions is provided in version 1.2 (or later) of the Synergistic Processor Unit Instruction Set Architecture specification. The future processor also supports improved issue and latency for all double precision instructions.

The SDK compilers support compilation for either the Cell BE processor or the future processor.

| Option | Description |
|---|---|
| -march=<cpu type> | Generate machine code for the SPU architecture specified by the CPU type. Supported CPU types are either cell (default) or celledp, corresponding to the Cell BE processor or future processor, respectively. |
| -mtune=<cpu type> | Schedule instructions according to the pipeline model of the specified CPU type. Supported CPU types are either cell (default) or celledp, corresponding to the Cell BE processor or future processor, respectively. |

| Option | Description |
|---|---|
| -qarch=<cpu type> | Generate machine code for the SPU architecture specified by the CPU type. Supported CPU types are either spu (default) or edp, corresponding to the Cell BE processor or future processor, respectively. |
| -qtune=<cpu type> | Schedule instructions according to the pipeline model of the specified CPU type. Supported CPU types are either spu (default) or edp, corresponding to the Cell BE processor or future processor, respectively. |

The simulator also supports simulation of the future processor. The simulator installation provides a tcl run script to configure it for such simulation. For example, the following sequence of commands starts the simulator configured for the future processor with a graphical user interface:

export PATH=$PATH:/opt/ibm/systemsim-cell/bin

systemsim -g -f config_edp_smp.tcl

The static timing analysis tool, spu_timing, also supports multiple processor implementations. Use the command line option -march=celledp to request timing analysis for the future processor's enhanced pipeline model. If the architecture is unspecified, or if spu_timing is invoked with the command line option -march=cell, the analysis is done for the Cell BE processor's pipeline model.

When you develop SPE programs using the SDK, you may wish to reference variables in the PPE address space from code running on an SPE. This is achieved through an extension to the C language syntax.

It might be desirable to share data in this way between an SPE and the PPE. This extension makes it easier to pass pointers so that you can use the PPE to perform certain functions on behalf of the SPE. You can readily share data between all SPEs through variables in the PPE address space.

The compiler recognizes an address space identifier __ea that can be used as an extra type qualifier like const or volatile in type and variable declarations. You can qualify variable declarations in this way, but not variable definitions.

The following are examples.

/* Variable declared on the PPE side. */

extern __ea int ppe_variable;

/* Can also be used in typedefs. */

typedef __ea int ppe_int;

/* SPE pointer variable pointing to memory in the PPE address space */

__ea int *ppe_pointer;

Pointers in the SPE address space can be cast to pointers in the PPE address space. Doing this transforms an SPE address into an equivalent address in the mapped SPE local store (in the PPE address space). The following is an example.

int x;

__ea int *ppe_pointer_to_x = &x;

These pointer variables can be passed to the PPE process by way of a mailbox and used by PPE code. With this method, you can perform operations in the PPE execution context such as copying memory from one region of the SPE local store to another.

In the same way, these pointers can be converted to and from the two address spaces, as follows:

int *spe_x;

spe_x = (int *) ppe_pointer_to_x;

References to __ea variables cause decreased performance. The implementation performs software caching of these variables, but the overhead is much higher when a variable is accessed for the first time. Modifications to __ea variables are also cached. The writeback of such modifications to the PPE address space may be delayed until the cache line is flushed or the SPU context terminates.

GCC for the SPU provides the following command line options to control the runtime behavior of programs that use the __ea extension. Many of these options specify parameters for the software-managed cache. In combination, these options cause GCC to link your program to a single software-managed cache library that satisfies those options. Table 5 describes these options.

| Option | Description |
|---|---|
| -mea32 | Generate code to access variables in 32-bit PPU objects. The compiler defines a preprocessor macro __EA32__ to allow applications to detect the use of this option. This is the default. |
| -mea64 | Generate code to access variables in 64-bit PPU objects. The compiler defines a preprocessor macro __EA64__ to allow applications to detect the use of this option. |
| -mcache-size=8 | Specify an 8 KB cache size. |
| -mcache-size=16 | Specify a 16 KB cache size. |
| -mcache-size=32 | Specify a 32 KB cache size. |
| -mcache-size=64 | Specify a 64 KB cache size. |
| -mcache-size=128 | Specify a 128 KB cache size. |
| -matomic-updates | Use DMA atomic updates when flushing a cache line back to PPU memory. This is the default. |
| -mno-atomic-updates | This negates the -matomic-updates option. |

Accessing an __ea variable from an SPU program creates a copy of this value in the local storage of the SPU. Subsequent modifications to the value in main storage are not automatically reflected in the copy of the value in local store. It is your responsibility to ensure data coherence for __ea variables that are accessed by both SPE and PPE programs.
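The caching behavior described above can be illustrated with a small model in portable C. This is a sketch under stated assumptions, not the software-managed cache library that GCC links in: the line size, the number of lines, the direct-mapped organization, and every name in it (main_storage, cached_read, cached_write, cache_flush_all, misses) are hypothetical. It shows only the three effects that matter to you: the first access to a line is a costly fetch, later accesses are served from the local copy, and a modification reaches main storage only when the line is flushed.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define LINE_SIZE 128   /* bytes per cache line (assumed for this model) */
#define NUM_LINES 4     /* tiny direct-mapped cache, for illustration only */

uint8_t main_storage[4096];     /* stand-in for PPE main storage */
unsigned misses;                /* counts the expensive first-touch fetches */

struct cache_line {
    int valid, dirty;
    uint32_t tag;               /* line-aligned address in main_storage */
    uint8_t data[LINE_SIZE];    /* local copy, as held in local store */
};

static struct cache_line cache[NUM_LINES];

/* Write a dirty line back to main storage. */
static void flush_line(struct cache_line *l)
{
    if (l->valid && l->dirty) {
        memcpy(&main_storage[l->tag], l->data, LINE_SIZE);
        l->dirty = 0;
    }
}

/* Return the cached line holding addr, fetching it on a miss. */
static struct cache_line *lookup(uint32_t addr)
{
    assert(addr < sizeof(main_storage));
    uint32_t tag = addr & ~(uint32_t)(LINE_SIZE - 1);
    struct cache_line *l = &cache[(tag / LINE_SIZE) % NUM_LINES];

    if (!l->valid || l->tag != tag) {
        flush_line(l);                          /* evict previous contents */
        memcpy(l->data, &main_storage[tag], LINE_SIZE);
        l->tag = tag;
        l->valid = 1;
        misses++;
    }
    return l;
}

uint8_t cached_read(uint32_t addr)
{
    return lookup(addr)->data[addr % LINE_SIZE];
}

void cached_write(uint32_t addr, uint8_t value)
{
    struct cache_line *l = lookup(addr);
    l->data[addr % LINE_SIZE] = value;
    l->dirty = 1;                               /* main storage is now stale */
}

void cache_flush_all(void)
{
    for (int i = 0; i < NUM_LINES; i++)
        flush_line(&cache[i]);
}
```

In this model, a value written with cached_write is invisible in main_storage until cache_flush_all runs, and a change made directly to main_storage after a line has been fetched is not seen by cached_read. Both effects correspond to the coherence responsibility described above.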

A complete example using __ea qualifiers to implement a quick sort algorithm on the SPU accessing PPE memory can be found in the examples/ppe_address_space directory provided by the SDK 3.0 cell-examples tar ball.

Each of the examples and demos has an associated README.txt file. There is also a top-level readme in the /opt/cell/sdk/src directory, which introduces the structure of the example code source tree.

Almost all of the examples run both within the simulator and on the BladeCenter QS21. Some examples include SPU-only programs that can be run on the simulator in standalone mode.

The source code, which is specific to a given Cell BE processor unit type, is in the corresponding subdirectory within a given example's directory:

In /opt/cell/sdk/buildutils there are some top level Makefiles that control the build environment for all of the examples. Most of the directories in the libraries and examples contain a Makefile for that directory and everything below it. All of the examples have their own Makefile but the common definitions are in the top level Makefiles.

The build environment Makefiles are documented in /opt/cell/sdk/buildutils/README_build_env.txt.

Environment variables in the /opt/cell/sdk/buildutils/make.* files are used to determine which compiler is used to build the examples.

The /opt/cell/sdk/buildutils/cellsdk_select_compiler script can be used to switch the compiler. The syntax of this command is:

/opt/cell/sdk/buildutils/cellsdk_select_compiler [xlc | gcc]

where the xlc flag selects the XL C/C++ compiler and the gcc flag selects the GCC compiler. The default, if unspecified, is to compile the examples with the GCC compiler.

After you have selected a particular compiler, that same compiler is used for all future builds, unless it is specifically overwritten by shell environment variables, SPU_COMPILER, PPU_COMPILER, PPU32_COMPILER, or PPU64_COMPILER.

You do not need to build all the example code at once; you can build each program separately. To start from scratch, issue a make clean using the Makefile in the /opt/cell/sdk/src directory or anywhere in the path to a specific library or sample.

If you have performed a make clean at the top level, you need to rebuild the include files and libraries first before you compile anything else. To do this run a make in the src/include and src/lib directories.

This release of the GNU tool chain includes a GCC compiler and utilities that optimize code for the Cell BE processor. These are:

The example below shows the steps required to create the executable program simple which contains SPU code, simple_spu.c, and PPU code, simple.c.

/usr/bin/spu-gcc -g -o simple_spu simple_spu.c

/usr/bin/ppu32-embedspu simple_spu simple_spu simple_spu-embed.o

/usr/bin/ppu32-gcc -g -o simple simple.c simple_spu-embed.o -lspe

/usr/bin/ppu32-gcc -g -o simple simple.c simple_spu -lspe

The SDK supports the huge translation lookaside buffer (TLB) file system, which allows you to reserve 16 MB huge pages of pinned, contiguous memory. This feature is particularly useful for some Cell BE applications that operate on large data sets, such as the FFT16M workload sample.

To configure the BladeCenter QS21 for 20 huge pages (320 MB), run the following commands:

mkdir -p /huge

echo 20 > /proc/sys/vm/nr_hugepages

mount -t hugetlbfs nodev /huge

If you have difficulties configuring adequate huge pages, it could be that the memory is fragmented and you need to reboot.

You can add the command sequence shown above to a startup initialization script, such as /etc/rc.d/rc.sysinit, so that the huge TLB file system is configured during the system boot.

To verify the large memory allocation, run the command cat /proc/meminfo. The output is similar to:

MemTotal: 1010168 kB

MemFree: 155276 kB

. . .

HugePages_Total: 20

HugePages_Free: 20

Hugepagesize: 16384 kB

Huge pages are allocated by invoking mmap of a /huge file of the specified size. For example, the following code sample allocates 32 MB of private huge paged memory:

int fmem;

void *ptr;

char *mem_file = "/huge/myfile.bin";

if ((fmem = open(mem_file, O_CREAT | O_RDWR, 0755)) == -1) {

    perror("open"); /* the /huge file could not be created */

    exit(1);

}

remove(mem_file); /* the name is no longer needed once the file is open */

ptr = mmap(0, 0x2000000, PROT_READ | PROT_WRITE, MAP_PRIVATE, fmem, 0);

mmap succeeds even if there are insufficient huge pages to satisfy the request. On first access to a page that cannot be backed by the huge TLB file system, the application is "killed". That is, the process is terminated and the message "killed" is emitted. You must ensure that the number of huge pages requested does not exceed the number available. Furthermore, on a BladeCenter QS20 and BladeCenter QS21, the huge pages are equally distributed across both Non-Uniform Memory Architecture (NUMA) memory nodes. Applications that restrict memory allocation to a specific node find that the number of available huge pages for the specific node is half of what is reported in /proc/meminfo.
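Because mmap does not report a shortage of huge pages, you might want to compare the size of a request with the HugePages_Free and Hugepagesize values before mapping. The following is a minimal sketch of such a check in portable C. The helper names meminfo_value and hugepages_sufficient are hypothetical, and the check works on text that you have already read from /proc/meminfo. It does not account for the per-node split described above, and the number of free pages can change between the check and the first access.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/*
 * Extract the value of a "Key: value" line from text in /proc/meminfo
 * format. Returns -1 if the key is missing or malformed.
 */
long meminfo_value(const char *text, const char *key)
{
    assert(text != NULL && key != NULL);
    size_t klen = strlen(key);
    const char *p = text;

    while (p != NULL && *p != '\0') {
        if (strncmp(p, key, klen) == 0 && p[klen] == ':') {
            long v;
            return sscanf(p + klen + 1, "%ld", &v) == 1 ? v : -1;
        }
        p = strchr(p, '\n');        /* advance to the next line */
        if (p != NULL)
            p++;
    }
    return -1;
}

/*
 * Return 1 if a mapping of `bytes` can be fully backed by the free
 * huge pages reported in `text`, 0 if not, -1 if the data is missing.
 */
int hugepages_sufficient(const char *text, unsigned long bytes)
{
    long free_pages = meminfo_value(text, "HugePages_Free");
    long page_kb = meminfo_value(text, "Hugepagesize");
    unsigned long page_bytes, needed;

    if (free_pages < 0 || page_kb <= 0)
        return -1;
    page_bytes = (unsigned long)page_kb * 1024UL;
    needed = (bytes + page_bytes - 1) / page_bytes;   /* round up */
    return needed <= (unsigned long)free_pages;
}
```

With the /proc/meminfo output shown earlier, a request for 0x2000000 bytes needs two 16 MB pages, so the check reports that the 20 free pages are sufficient.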

This section documents some best practices in terms of developing applications using the SDK. See also developerWorks articles about programming tips and best practices for writing Cell BE applications at

http://www.ibm.com/developerworks/power/cell/

Multiple users should not update the common simulator sysroot image file by mounting it read-write in the simulator. For shared development environments, the callthru utility (see The callthru utility) can be used to get files in and out of the simulator. Alternatively, users can copy the sysroot image file to their own sandbox area and then mount this version with read/write permissions to make persistent updates to the image.

If multiple users need to run Cell BE applications on a BladeCenter QS21, you need a machine reservation mechanism to reduce collisions between two people who are using SPEs at the same time. This is because SPE threads are not fully preemptable in this version of the SDK.

The following sections discuss performance considerations that you should take into account when you are developing applications:

The BladeCenter QS20 and BladeCenter QS21 are both Non-Uniform Memory Architecture (NUMA) systems, which consist of two Cell BE processors, each with its own system memory. The two processors are interconnected through a FlexIO interface using the fully coherent BIF protocol. The bandwidth between processor elements, or between processor elements and memory, is greater if accesses are local and do not have to cross the FlexIO interface. In addition, the access latency is slightly higher on node 1 (Cell BE 1) than on node 0 (Cell BE 0), regardless of whether the accesses are local or non-local.

To maximize the performance of a single application, you can specify CPU and memory binding to either reduce FlexIO traffic or exploit the aggregated bandwidth of the memory available on both nodes. You can specify the Linux scheduling policy or memory placement either through application-specified NUMA policy library calls (man numa(3)) or using the numactl command (man numactl(8)).

For example, the following command invokes a program that allocates all CPUs on node 0 with a preferred memory allocation on node 0:

numactl --cpunodebind=0 --preferred=0 <program name>

Choosing an optimal NUMA policy depends upon the application's communication and data access patterns. However, you should consider the following general guidelines:

The Linux operating system provides preemptive context switching of virtualized SPE contexts that each resemble the functionality provided by a physical SPE, but there are limitations to the degree to which the architected hardware interfaces can be used in a virtualized environment.

In particular, memory mapped I/O on the problem state register area and MFC proxy DMA access can only be used while the SPE context is both running in a thread and not preempted, otherwise the thread trying to perform these operations blocks until the conditions are met.

This can result in poor performance and deadlocks for programs that over-commit SPEs and rely on SPE thread communications and synchronization. In this case, you should avoid running more SPE threads than there are physical SPEs.

This section describes how to debug Cell BE applications. It describes the following:

GDB is the standard command-line debugger available as part of the GNU development environment. GDB has been modified to allow debugging in a Cell BE processor environment. This section describes how to debug Cell BE software using the new and extended features of the GDB versions that are supplied with SDK 3.0.

Debugging in a Cell BE processor environment is different from debugging in a multithreaded environment, because threads can run either on the PPE or on the SPE.

There are three versions of GDB which can be installed on a BladeCenter QS21:

This section also describes how to run applications under gdbserver. The gdbserver program allows remote debugging.

The GDB program released with SDK 3.0 replaces previous versions and contains the following enhancements:

The linker embeds all the symbolic and additional information required for the SPE binary within the PPE binary so it is available for the debugger to access when the program runs. You should use the -g option when compiling both SPE and PPE code with GCC or XLC. The -g option adds debugging information to the binary, which then enables GDB to look up symbols and show the symbolic information. When you use the top-level Makefiles of the SDK, you can specify the -g option on compilation commands by setting the CC_OPT_LEVEL makefile variable to -g.

For more information about compiling with GCC, see Compiling and linking with the GNU tool chain.

This section assumes that you are familiar with the standard features of GDB. It describes the following topics:

There are several ways to debug programs designed for the Cell BE processor. If you have access to Cell BE hardware, you can debug directly using ppu-gdb. You can also run the application under ppu-gdb inside the simulator. Alternatively, you can debug remotely as described in Setting up remote debugging.

Whichever method you choose, after you have started the application under ppu-gdb, you can use the standard GDB commands available to debug the application. The GDB manual is available at the GNU Web site

http://www.gnu.org/software/gdb/gdb.html

and there are many other resources available on the World Wide Web.

Standalone SPE programs or spulets are self-contained applications that run entirely on the SPE. Use spu-gdb to launch and debug standalone SPE programs in the same way as you use ppu-gdb on PPE programs.

The examples in this section use a standalone SPE (spulet) program, simple.c, whose source code and Makefile are given below:

Source code:

#include <stdio.h>

#include <spu_intrinsics.h>

unsigned int

fibn(unsigned int n)

{

if (n <= 2)

return 1;

return (fibn (n-1) + fibn (n-2));

}

int

main(int argc, char **argv)

{

unsigned int c;

c = fibn (8);

printf ("c=%d\n", c);

return 0;

}

Makefile:

simple: simple.c

spu-gcc simple.c -g -o simple

Source-level debugging of SPE programs with spu-gdb is similar in nearly all aspects to source-level debugging for the PPE. For example, you can:

The following example illustrates the backtrace output for the simple.c standalone SPE program.

$ spu-gdb ./simple

GNU gdb 6.7

Copyright (C) 2006 Free Software Foundation, Inc.

GDB is free software, covered by the GNU General Public License, and you are

welcome to change it and/or distribute copies of it under certain conditions.

Type "show copying" to see the conditions.

There is absolutely no warranty for GDB. Type "show warranty" for details.

This GDB was configured as "--host=powerpc64-unknown-linux-gnu --target=spu"...

(gdb) break 8

Breakpoint 1 at 0x184: file simple.c, line 8.

(gdb) break 18

Breakpoint 2 at 0x220: file simple.c, line 18.

(gdb) run

Starting program: /home/usr/md/fib/simple

Breakpoint 1, fibn (n=2) at simple.c:8

8 return 1;

(gdb) backtrace

#0 fibn (n=2) at simple.c:8

#1 0x000001a0 in fibn (n=3) at simple.c:10

#2 0x000001a0 in fibn (n=4) at simple.c:10

#3 0x000001a0 in fibn (n=5) at simple.c:10

#4 0x000001a0 in fibn (n=6) at simple.c:10

#5 0x000001a0 in fibn (n=7) at simple.c:10

#6 0x000001a0 in fibn (n=8) at simple.c:10

#7 0x0000020c in main (argc=1, argv=0x3ffe0) at simple.c:16

(gdb) delete breakpoint 1

(gdb) continue

Continuing.

Breakpoint 2, main (argc=1, argv=0x3ffe0) at simple.c:18

18 printf("c=%d\n", c);

(gdb) print c

$1 = 21

(gdb)

The spu-gdb program also supports many of the familiar techniques for debugging SPE programs at the assembler code level. For example, you can:

The following example illustrates some of these facilities.

$ spu-gdb ./simple

GNU gdb 6.7

Copyright (C) 2007 Free Software Foundation, Inc.

GDB is free software, covered by the GNU General Public License, and you are

welcome to change it and/or distribute copies of it under certain conditions.

Type "show copying" to see the conditions.

There is absolutely no warranty for GDB. Type "show warranty" for details.

This GDB was configured as "--host=powerpc64-unknown-linux-gnu --target=spu"...

(gdb) br 18

Breakpoint 1 at 0x220: file simple.c, line 18.

(gdb) r

Starting program: /home/usr/md/fib/simple

Breakpoint 1, main (argc=1, argv=0x3ffe0) at simple.c:18

18 printf("c=%d\n", c);

(gdb) print c

$1 = 21

(gdb) x /8i $pc

0x220 <main+72>: ila $3,0x8c0 <_fini+32>

0x224 <main+76>: lqd $4,32($1) # 20

0x228 <main+80>: brsl $0,0x2a0 <printf> # 2a0

0x22c <main+84>: il $2,0

0x230 <main+88>: ori $3,$2,0

0x234 <main+92>: ai $1,$1,80 # 50

0x238 <main+96>: lqd $0,16($1)

0x23c <main+100>: bi $0

(gdb) nexti

0x00000224 18 printf("c=%d\n", c);

(gdb) nexti

0x00000228 18 printf("c=%d\n", c);

(gdb) print $r4

$1 = {uint128 = 0x0003ffd0000000001002002000000030, v2_int64 = {

1125693748412416, 1153484591999221808}, v4_int32 = {262096, 0, 268566560,48},

v8_int16 = {3, -48, 0, 0, 4098, 32, 0, 48},v16_int8 =

"\000\003??\000\000\000\000\020\002\000 \000\000\0000",

v2_double = {5.5616660882883401e-309, 1.4492977868115269e-231},

v4_float = {

3.67274722e-40, 0, 2.56380757e-29, 6.72623263e-44}}

Because each SPE register can hold multiple fixed or floating point values of several different sizes, spu-gdb treats each register as a data structure that can be accessed with multiple formats. The spu-gdb ptype command, illustrated in the following example, shows the mapping used for SPE registers:

(gdb) ptype $r80

type = union __spu_builtin_type_vec128 {

int128_t uint128;

int64_t v2_int64[2];

int32_t v4_int32[4];

int16_t v8_int16[8];

int8_t v16_int8[16];

double v2_double[2];

float v4_float[4];

}

To display or update a specific vector element in an SPE register, specify the appropriate field in the data structure, as shown in the following example:

(gdb) p $r80.uint128

$1 = 0x00018ff000018ff000018ff000018ff0

(gdb) set $r80.v4_int32[2]=0xbaadf00d

(gdb) p $r80.uint128

$2 = 0x00018ff000018ff0baadf00d00018ff0
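To see why setting v4_int32[2] changes the third group of eight hexadecimal digits, it can help to model the register outside the debugger. The following sketch is an illustration only. It treats a 128-bit register as 16 bytes stored most significant byte first, which matches the output shown above. The type vec128 and the functions set_v4_int32, get_v4_int32, and format_uint128 are hypothetical names and are not part of spu-gdb or the SDK.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* A 128-bit SPE register modeled as 16 bytes, most significant byte first. */
typedef struct { uint8_t b[16]; } vec128;

/* Store a 32-bit value into word element idx (0..3), big-endian. */
void set_v4_int32(vec128 *r, int idx, uint32_t value)
{
    assert(r != NULL && idx >= 0 && idx < 4);
    for (int i = 0; i < 4; i++)
        r->b[idx * 4 + i] = (uint8_t)(value >> (24 - 8 * i));
}

/* Load word element idx (0..3). */
uint32_t get_v4_int32(const vec128 *r, int idx)
{
    assert(r != NULL && idx >= 0 && idx < 4);
    uint32_t v = 0;
    for (int i = 0; i < 4; i++)
        v = (v << 8) | r->b[idx * 4 + i];
    return v;
}

/* Format the register as one 128-bit hex value; out must hold 35 bytes. */
void format_uint128(const vec128 *r, char out[35])
{
    assert(r != NULL && out != NULL);
    int n = sprintf(out, "0x");
    for (int i = 0; i < 16; i++)
        n += sprintf(out + n, "%02x", r->b[i]);
}
```

Word element 2 occupies bytes 8 through 11 of the register, so storing 0xbaadf00d there replaces only the third 32-bit group of the 128-bit value.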

SPU local store space is limited. Allocating too much stack space limits space available for code and data. Allocating too little stack space can cause runtime failures. To help you allocate stack space efficiently, the SPU linker provides an estimate of maximum stack usage when it is called with the option --stack-analysis. The value returned by this command is not guaranteed to be accurate because the linker analysis does not include dynamic stack allocation such as that done by the alloca function. The linker also does not handle calls made by function pointers or recursion and other cycles in the call graph. However, even with these limitations, the estimate can still be useful. The linker provides detailed information on stack usage and calls in a linker map file, which can be enabled by passing the parameter -Map <filename> to the linker. This extra information combined with known program behavior can help you to improve on the linker's simple analysis.

For the following simple program, hello.c:

#include <stdio.h>

#include <unistd.h>

int foo (void)

{

printf (" world\n");

printf ("brk: %x\n", sbrk(0));

(void) fgetc (stdin);

return 0;

}

int main (void)

{

printf ("Hello");

return foo ();

}

The command spu-gcc -o hello -O2 -Wl,--stack-analysis,-Map,hello.map hello.c generates the following output:

Stack size for call graph root nodes.

_start: 0x540

_fini: 0x40

call___do_global_dtors_aux: 0x20

call_frame_dummy: 0x20

__sfp: 0x0

__cleanup: 0xc0

call___do_global_ctors_aux: 0x20

Maximum stack required is 0x540

This output shows that the main entry point _start will require 0x540 bytes of stack space below __stack. There are also a number of other root nodes that the linker fails to connect into the call graph. These are either functions called through function pointers, or unused functions. _fini, registered with atexit() and called from exit, is an example of the former. All other nodes here are unused.

The hello.map section for stack analysis shows:

Stack size for functions. Annotations: '*' max stack, 't' tail call

_exit: 0x0 0x0

__call_exitprocs: 0xc0 0xc0

exit: 0x30 0xf0

calls:

_exit

* __call_exitprocs

__errno: 0x0 0x0

__send_to_ppe: 0x40 0x40

calls:

__errno

__sinit: 0x0 0x0

fgetc: 0x40 0x80

calls:

* __send_to_ppe

__sinit

printf: 0x4c0 0x500

calls:

* __send_to_ppe

sbrk: 0x20 0x20

calls:

__errno

puts: 0x30 0x70

calls:

* __send_to_ppe

foo: 0x20 0x520

calls:

fgetc

* printf

sbrk

puts

main: 0x20 0x520

calls:

*t foo

printf

__register_exitproc: 0x0 0x0

atexit: 0x0 0x0

calls:

t __register_exitproc

__do_global_ctors_aux: 0x30 0x30

frame_dummy: 0x20 0x20

_init: 0x20 0x50

calls:

* __do_global_ctors_aux

frame_dummy

_start: 0x20 0x540

calls:

exit

* main

atexit

_init

__do_global_dtors_aux: 0x20 0x20

_fini: 0x20 0x40

calls:

* __do_global_dtors_aux

call___do_global_dtors_aux: 0x20 0x20

call_frame_dummy: 0x20 0x20

__sfp: 0x0 0x0

fclose: 0x40 0x80

calls:

* __send_to_ppe

__sinit

__cleanup: 0x40 0xc0

calls:

* fclose

call___do_global_ctors_aux: 0x20 0x20

In the entry for the main function, this analysis shows that main itself requires 0x20 bytes of local stack and that main, including all called functions, requires a total of 0x520 bytes. The function called from main that requires the maximum amount of stack space is foo, which main calls through a tail function call. Tail calls occur after the local stack for the caller is deallocated. Therefore, the maximum stack space allocated for main is the same as the maximum stack space allocated for foo. The main function also calls the printf function.
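The totals in the map file can be reproduced by hand with a simple rule: the total for a function is its local stack plus the largest total among the functions it calls, except that for a tail call the caller's local stack is not added. The following sketch applies that rule in portable C. It is an illustration of the arithmetic under the assumption of an acyclic call graph, not the linker's implementation, and the names struct func, struct call, and total_stack are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_CALLS 4

struct call {
    int callee;         /* index of the called function in the table */
    int is_tail;        /* nonzero for a tail call ('t' in the map file) */
};

struct func {
    const char *name;
    unsigned local;     /* local stack frame size in bytes */
    int ncalls;
    struct call calls[MAX_CALLS];
};

/*
 * Total stack needed by function f and everything it calls.
 * Assumes an acyclic call graph (no recursion).
 */
unsigned total_stack(const struct func *table, int f)
{
    assert(table != NULL && f >= 0);
    unsigned max = table[f].local;

    for (int i = 0; i < table[f].ncalls; i++) {
        const struct call *c = &table[f].calls[i];
        unsigned callee = total_stack(table, c->callee);
        /* A tail call runs after the caller's frame is released. */
        unsigned need = c->is_tail ? callee : table[f].local + callee;
        if (need > max)
            max = need;
    }
    return max;
}
```

For example, main has a local stack of 0x20 and calls printf (total 0x500) normally and foo (total 0x520) as a tail call. The normal call needs 0x20 + 0x500 = 0x520 bytes and the tail call needs 0x520 bytes, so the total for main is 0x520.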

If you are uncertain whether the _fini function might require more stack space than main, trace down from the _start function to the __call_exitprocs function (where _fini is called) to find the stack requirement for that code path. The stack size is 0x20 (local stack for _start) plus 0x30 (local stack for exit) plus 0xC0 (local stack for __call_exitprocs) plus 0x40 (total stack for _fini), or 0x150 bytes. Therefore, the stack is sufficient for _fini.

If you pass the --emit-stack-syms option to the linker, it will save the stack sizing information in the executable for use by post-link tools such as FDPRPro. With this option specified, the linker creates symbols of the form __stack_<function_name> for global functions, and __stack_<number>_<function_name> for static functions. The value of these symbols is the total stack size requirement for the corresponding function.

You can link against these symbols. The following is an example.

extern void __stack__start;

printf ("Total stack is %ld\n", (long) &__stack__start);

The SPE stack shares local storage with the application's code and data. Because local storage is a limited resource and lacks hardware-enabled protection it is possible to overflow the stack and corrupt the program's code or data or both. This often results in hard to debug problems because the effects of the overflow are not likely to be observed immediately.

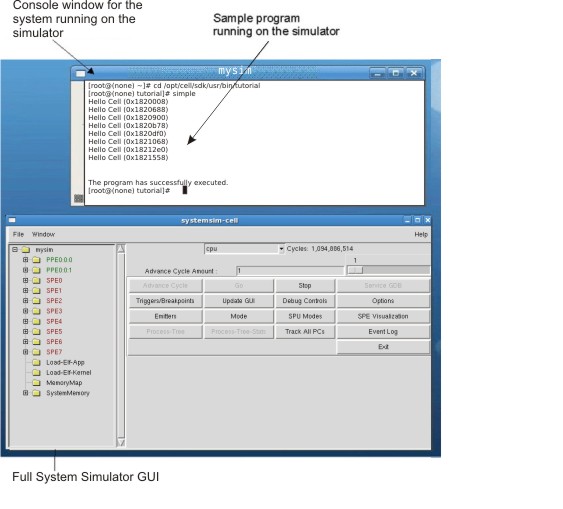

To understand how to debug stack overflows, it is important to understand how the SPE local storage is allocated and how the stack is managed (see Figure 2).

The area between the linker symbol that marks the end of the program's code and data sections, _end, and the top of local storage is dynamic storage. This dynamic storage is used for two purposes: the stack and the malloc heap. The stack grows downward (from high addressed memory to low addressed memory), and the malloc heap grows upward.

The C runtime startup code (crt0) initializes the stack pointer register (register 1) such that word element 0 contains the current stack pointer and word element 1 contains the number of dynamic storage bytes currently available. The stack pointer and space available is negatively adjusted when a stack frame is acquired and positively adjusted when a stack frame is released. The space available is negatively adjusted, up to the available space, whenever the malloc heap is enlarged.

During application development it is advisable that you use stack overflow checking and then disable it when the application is released. Because the spu-gcc and spuxlc compilers do not by default emit code to detect stack overflow, you must include a compile line option:

Stack checking introduces additional code to test for stack overflow. The additional code halts execution conditional on the available space going negative as a result of acquiring or enlarging a stack frame.

For a standalone SPU program, the occurrence of a halt results in a "spe_run: Bad address" message and exit code of SPE_SPU_HALT (4).

For SPE contexts being run from a PPE program, a stack overflow results in a stopinfo, stop_reason of SPE_RUNTIME_ERROR with a spe_runtime_error equal to SPE_SPU_HALT. See the spe_context_run subroutine specification of the SPE Runtime Management Library for additional details.

To reduce the occurrence of stack overflows, you should consider the following strategies:

To debug combined code, that is code containing both PPE and SPE code, you must use ppu-gdb.

Typically a simple program contains only one thread. For example, a PPU "hello world" program is run in a process with a single thread and the GDB attaches to that single thread.

On many operating systems, a single program can have more than one thread. The ppu-gdb program allows you to debug programs with one or more threads. The debugger shows all threads while your program runs, but whenever the debugger runs a debugging command, the user interface shows the single thread involved. This thread is called the current thread. Debugging commands always show program information from the point of view of the current thread. For more information about GDB support for debugging multithreaded programs, see the sections 'Debugging programs with multiple threads' and 'Stopping and starting multi-thread programs' of the GDB User's Manual, available at

http://www.gnu.org/software/gdb/gdb.html

The info threads command displays the set of threads that are active for the program, and the thread command can be used to select the current thread for debugging.

On the Cell BE processor, a thread can run on either the PPE or on an SPE at any given point in time. All threads, both the main thread of execution and secondary threads started using the pthread library, start execution on the PPE. Execution can switch from the PPE to an SPE when a thread executes the spe_context_run function. See the libspe2 manual for details. Conversely, a thread currently executing on an SPE may switch to use the PPE when executing a library routine that is implemented via the PPE-assisted call mechanism. See the Cell BE Linux Reference Implementation ABI document for details. When you choose a thread to debug, the debugger automatically detects the architecture the thread is currently running on. If the thread is currently running on the PPE, the debugger uses the PowerPC architecture. If the thread is currently running on an SPE, the debugger uses the SPE architecture. A thread that is currently executing code on an SPE may also be referred to as an SPE thread.

To see which architecture the debugger is using, use the show architecture command.

Example: show architecture

The example below shows the results of the show architecture command at two different breakpoints in a program. At breakpoint 1 the program is executing in the original PPE thread, where the show architecture command indicates that the architecture is powerpc:common. The program then spawns an SPE thread which executes the SPU code in simple_spu.c. When the debugger detects that the SPE thread has reached breakpoint 3, it switches to this thread and sets the architecture to spu:256K. For more information about breakpoint 2, see Setting pending breakpoints.

[user@localhost simple]$ ppu-gdb ./simple

...

...

...

(gdb) break main

Breakpoint 1 at 0x1801654: file simple.c, line 23.

(gdb) run

Starting program: /home/user/md/simple/simple

[Thread debugging using libthread_db enabled]

[New Thread 4160655360 (LWP 2490)]

[Switching to Thread 4160655360 (LWP 2490)]

Breakpoint 1, main (argc=1, argv=0xfff7a9e4) at simple.c:23

23 int i, status = 0;

(gdb) show architecture

The target architecture is set automatically (currently powerpc:common)

(gdb) break simple_spu.c:5

No source file named simple_spu.c.

Make breakpoint pending on future shared library load? (y or [n]) y

Breakpoint 2 (simple_spu.c:5) pending.

(gdb) continue

Continuing.

Breakpoint 3 at 0x158: file simple_spu.c, line 5.

Pending breakpoint "simple_spu.c:5" resolved

[New Thread 4160390384 (LWP 2495)]

[Switching to Thread 4160390384 (LWP 2495)]

Breakpoint 3, main (id=103079215104) at simple_spu.c:13

13 {

(gdb) show architecture

The target architecture is set automatically (currently spu:256K)

(gdb)

As described in Debugging architecture, any thread of a combined Cell BE application is executing either on the PPE or on an SPE at the time the debugger interrupts execution of the process being debugged. This determines the main architecture GDB uses when examining the thread. However, during the execution history of that thread, execution may have switched between architectures one or more times. When you look at the thread's stack backtrace (using the backtrace command), the debugger reflects those switches and shows stack frames belonging to both the PPE and SPE architectures.

Example: An SPE context is interrupted by the debugger while executing a PPE-assisted scanf call

(gdb) backtrace

#0 0x0ff1a8e8 in __read_nocancel () from /lib/libc.so.6

#1 0x0feb7e04 in _IO_new_file_underflow (fp=<value optimized out>) at fileops.c:590

#2 0x0feb82c0 in _IO_default_uflow (fp=<value optimized out>) at genops.c:435

#3 0x0feba518 in *__GI___uflow (fp=<value optimized out>) at genops.c:389

#4 0x0fe9b834 in _IO_vfscanf_internal (s=<value optimized out>, format=<value optimized out>, argptr=<value optimized out>, errp=<value optimized out>) at vfscanf.c:542

#5 0x0fe9f858 in ___vfscanf (s=<value optimized out>, format=<value optimized out>, argptr=<value optimized out>) at vfscanf.c:2473

#6 0x0fe18688 in __do_vfscanf (stream=<value optimized out>, format=<value optimized out>, vlist=<value optimized out>) at default_c99_handler.c:284

#7 0x0fe1ab38 in default_c99_handler_vscanf (ls=<value optimized out>, opdata=<value optimized out>) at default_c99_handler.c:1193

#8 0x0fe176b0 in default_c99_handler (base=<value optimized out>, offset=<value optimized out>) at default_c99_handler.c:1990

#9 0x0fe1f1b8 in handle_library_callback (spe=<value optimized out>, callnum=<value optimized out>, npc=<value optimized out>) at lib_builtin.c:152

#10 <cross-architecture call>

#11 0x0003fac4 in ?? ()

#12 0x00000360 in scanf (fmt=<value optimized out>) at ../../../../../../src/newlib/libc/machine/spu/scanf.c:74

#13 0x00000170 in main () at test.c:8

When you choose a particular stack frame to examine using the frame, up, or down commands, the debugger switches its notion of the current architecture as appropriate for the selected frame. For example, if you use the info registers command to look at the selected frame's register contents, the debugger shows the SPE register set if the selected frame belongs to an SPE context, and the PPE register set if the selected frame belongs to PPE code.

Example: continued

(gdb) frame 7

#7 0x0fe1ab38 in default_c99_handler_vscanf (ls=<value optimized out>,

opdata=<value optimized out>)

at default_c99_handler.c:1193

1193 default_c99_handler.c: No such file or directory.

in default_c99_handler.c

(gdb) show architecture

The target architecture is set automatically (currently powerpc:common)

(gdb) info registers

r0 0x3 3

r1 0xfec2eda0 4274187680

r2 0xfff9ba0 268409760

r3 0x200 512

<...>

(gdb) frame 13

#13 0x00000170 in main () at test.c:8

8 scanf ("%d\n", &x);

(gdb) show architecture

The target architecture is set automatically (currently spu:256K)

(gdb) info registers

r0 {uint128 = 0x00000170000000000000000000000000, v2_int64 = {0x17000000000, 0x0},

v4_int32 = {0x170, 0x0, 0x0, 0x0}, v8_int16 = {0x0, 0x170, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0},

v16_int8 = {0x0, 0x0, 0x1, 0x70, 0x0 <repeats 12 times>}, v2_double = {0x0, 0x0},

v4_float = {0x0, 0x0, 0x0, 0x0}}

r1 {uint128 = 0x0003ffa0000000000000000000000000, v2_int64 = {0x3ffa000000000, 0x0},

v4_int32 = {0x3ffa0, 0x0, 0x0, 0x0}, v8_int16 = {0x3, 0xffa0, 0x0, 0x0, 0x0, 0x0, 0x0, 0x0},

v16_int8 = {0x0, 0x3, 0xff, 0xa0, 0x0 <repeats 12 times>}, v2_double = {0x0, 0x0},

v4_float = {0x0, 0x0, 0x0, 0x0}}

<...>
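The SPE register listing above prints each 128-bit register several times, once per vector interpretation. As an illustrative sketch of how the integer views relate to one another, the following C helpers decode a register held as 16 bytes, most significant byte first, which matches the byte order visible in the output above. The type spu_reg_t and the function names are hypothetical; they are not part of GDB or the SDK.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical container: one 128-bit SPE register, stored as 16 bytes
 * with the most significant byte first. */
typedef struct {
    uint8_t bytes[16];
} spu_reg_t;

/* 32-bit lane `lane` (0..3), as in GDB's v4_int32 view. */
uint32_t reg_int32_lane(const spu_reg_t *r, int lane)
{
    assert(lane >= 0 && lane < 4);
    const uint8_t *p = &r->bytes[lane * 4];
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
}

/* 16-bit lane `lane` (0..7), as in GDB's v8_int16 view. */
uint16_t reg_int16_lane(const spu_reg_t *r, int lane)
{
    assert(lane >= 0 && lane < 8);
    const uint8_t *p = &r->bytes[lane * 2];
    return (uint16_t)(((unsigned)p[0] << 8) | p[1]);
}

/* 64-bit lane `lane` (0..1), as in GDB's v2_int64 view. */
uint64_t reg_int64_lane(const spu_reg_t *r, int lane)
{
    assert(lane >= 0 && lane < 2);
    return ((uint64_t)reg_int32_lane(r, lane * 2) << 32) |
            (uint64_t)reg_int32_lane(r, lane * 2 + 1);
}
```

Applied to the r0 value shown above, whose first four bytes are 0x00, 0x00, 0x01, 0x70, these helpers give 0x170 for the first 32-bit lane, 0x0 and 0x170 for the first two 16-bit lanes, and 0x17000000000 for the first 64-bit lane, in agreement with the listing.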

Compiling with the '-g' option adds debugging information to the binary that enables GDB to look up symbols and show the symbolic information.

The debugger sees SPE executable programs as shared libraries. The info sharedlibrary command shows all the shared libraries including the SPE executables when running SPE threads.

Example: info sharedlibrary

The example below shows the results of the info sharedlibrary command at two breakpoints on one thread. At breakpoint 1, the thread is running on the PPE; at breakpoint 3, the thread is running on the SPE. For more information about breakpoint 2, see Setting pending breakpoints.

(gdb) break main

Breakpoint 1 at 0x1801654: file simple.c, line 23.

(gdb) r

Starting program: /home/user/md/simple/simple

[Thread debugging using libthread_db enabled]

[New Thread 4160655360 (LWP 2528)]

[Switching to Thread 4160655360 (LWP 2528)]

Breakpoint 1, main (argc=1, argv=0xffacb9e4) at simple.c:23

23 int i, status = 0;

(gdb) info sharedlibrary

From To Syms Read Shared Object Library

0x0ffc1980 0x0ffd9af0 Yes /lib/ld.so.1

0x0fe14b40 0x0fe20a00 Yes /usr/lib/libspe.so.1

0x0fe5d340 0x0ff78e30 Yes /lib/libc.so.6

0x0fce47b0 0x0fcf1a40 Yes /lib/libpthread.so.0

0x0f291cc0 0x0f2970e0 Yes /lib/librt.so.1

(gdb) break simple_spu.c:5

No source file named simple_spu.c.

Make breakpoint pending on future shared library load? (y or [n]) y

Breakpoint 2 (simple_spu.c:5) pending.

(gdb) c

Continuing.

Breakpoint 3 at 0x158: file simple_spu.c, line 5.

Pending breakpoint "simple_spu.c:5" resolved

[New Thread 4160390384 (LWP 2531)]

[Switching to Thread 4160390384 (LWP 2531)]

Breakpoint 3, main (id=103079215104) at simple_spu.c:13

13 {

(gdb) info sharedlibrary

From To Syms Read Shared Object Library

0x0ffc1980 0x0ffd9af0 Yes /lib/ld.so.1

0x0fe14b40 0x0fe20a00 Yes /usr/lib/libspe.so.1

0x0fe5d340 0x0ff78e30 Yes /lib/libc.so.6

0x0fce47b0 0x0fcf1a40 Yes /lib/libpthread.so.0

0x0f291cc0 0x0f2970e0 Yes /lib/librt.so.1

0x00000028 0x00000860 Yes simple_spu@0x1801d00 <6>

(gdb)

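GDB creates a unique name for each shared library entry representing SPE code. That name consists of the SPE executable name, followed by the location in PPE memory where the SPE executable is mapped (or embedded into the PPE executable image), and the SPE ID of the SPE thread where the code is loaded. In the example above, the entry simple_spu@0x1801d00 <6> therefore denotes the executable simple_spu, located at PPE address 0x1801d00 and loaded into the SPE thread with SPE ID 6.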
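The three parts of such a name can be pulled apart mechanically. The sketch below assumes the layout seen in the example output, name@0xADDRESS <ID>, and nothing more; the type spe_solib_name_t and the function parse_spe_solib_name are hypothetical helpers, not part of GDB or the SDK.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical record for one SPE entry of `info sharedlibrary`. */
typedef struct {
    char name[64];              /* SPE executable name */
    unsigned long ppe_address;  /* location of the SPE image in PPE memory */
    int spe_id;                 /* SPE ID of the thread running the code */
} spe_solib_name_t;

/* Parse an entry such as "simple_spu@0x1801d00 <6>".
 * Returns 0 on success, -1 if the text does not have that shape
 * (for example, an ordinary PPE library path). */
int parse_spe_solib_name(const char *entry, spe_solib_name_t *out)
{
    int consumed = 0;

    assert(entry != NULL && out != NULL);
    memset(out, 0, sizeof *out);

    /* %n records how many characters were consumed, so trailing
     * garbage and a missing '>' can both be rejected. */
    if (sscanf(entry, "%63[^@]@%lx <%d>%n",
               out->name, &out->ppe_address, &out->spe_id, &consumed) != 3)
        return -1;

    return (consumed > 0 && entry[consumed] == '\0') ? 0 : -1;
}
```

A script that post-processes captured debugger output could use such a helper to tell SPE entries apart from ordinary PPE libraries, for which the function returns -1.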

Scheduler-locking is a feature of GDB that simplifies multithread debugging by enabling you to control the behavior of multiple threads when you single-step through a thread. By default scheduler-locking is off, and this is the recommended setting.

In the default mode where scheduler-locking is off, single-stepping through one particular thread does not stop other threads of the application from running, but allows them to continue to execute. This applies to both threads executing on the PPE and on the SPE. This may not always be what you expect or want when debugging multithreaded applications, because those threads executing in the background may affect global application state asynchronously in ways that can make it difficult to reliably reproduce the problem you are debugging. If this is a concern, you can turn scheduler-locking on. In that mode, all other threads remain stopped while you are debugging one particular thread. A third option is to set scheduler-locking to step, which stops other threads while you are single-stepping the current thread, but lets them execute while the current thread is freely running.

However, if scheduler-locking is turned on, there is the potential for deadlocking where one or more threads cannot continue to run. Consider, for example, an application consisting of multiple SPE threads that communicate with each other through a mailbox. If you single-step one thread across an instruction that reads from the mailbox, and that mailbox happens to be empty at the moment, this instruction (and thus the debugging session) will block until another thread writes a message to the mailbox. However, if scheduler-locking is on, that other thread will remain stopped by the debugger because you are single-stepping. In this situation none of the threads can continue, and the whole program stalls indefinitely. This situation cannot occur when scheduler-locking is off, because in that case all other threads continue to run while the first thread is single-stepped. You should ensure that you enable scheduler-locking only for applications where such deadlocks cannot occur.

There are situations where you can safely set scheduler-locking on, but you should do so only when you are sure there are no deadlocks.
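The mailbox scenario described above can be illustrated with a small deterministic model. This is a sketch only: it does not use real threads, SPE mailboxes, or GDB, and all names (mailbox_sim_t, single_step_reader, and so on) are hypothetical. A reader thread is single-stepped across a blocking mailbox read, and a writer thread posts a message after a few of its own instructions, provided the debugger lets it run.

```c
#include <assert.h>
#include <stddef.h>

/* The three scheduler-locking settings discussed in the text. */
typedef enum { SCHED_LOCK_OFF, SCHED_LOCK_ON, SCHED_LOCK_STEP } sched_lock_t;

/* Hypothetical state of the two-thread model. */
typedef struct {
    int mailbox_count;      /* messages waiting in the mailbox */
    int reader_done;        /* set once the reader has its message */
    int writer_steps_left;  /* writer instructions before it posts */
} mailbox_sim_t;

/* Let the writer thread execute one instruction. */
static void run_writer(mailbox_sim_t *sim)
{
    if (sim->writer_steps_left > 0 && --sim->writer_steps_left == 0)
        sim->mailbox_count++;
}

/* Single-step the reader across its mailbox read.
 * Returns 1 if the step completes within max_ticks time slices,
 * or 0 if the reader is still blocked, which models the stall. */
int single_step_reader(mailbox_sim_t *sim, sched_lock_t mode, int max_ticks)
{
    assert(sim != NULL);

    for (int tick = 0; tick < max_ticks; tick++) {
        if (sim->mailbox_count > 0) {
            sim->mailbox_count--;
            sim->reader_done = 1;
            return 1;
        }
        /* Other threads run during a single-step only when locking is
         * off; both `on` and `step` keep them stopped. */
        if (mode == SCHED_LOCK_OFF)
            run_writer(sim);
    }
    return 0;
}
```

With an empty mailbox, the step completes only when the mode is off. With a message already queued, it completes in every mode, which corresponds to the case where turning scheduler-locking on is safe.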

The syntax of the command is:

set scheduler-locking <mode>

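where mode has one of the following values:

off: Other threads continue to run while you single-step the current thread. This is the default.

on: All other threads remain stopped while you debug the current thread.

step: Other threads are stopped while you single-step the current thread, but run while the current thread is running freely.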

You can check the scheduler-locking mode with the following command:

show scheduler-locking

Generally speaking, you can use the same procedures to debug code for Cell BE as you would for PPC code. However, some existing features of GDB and one new command can help you to debug in the Cell BE processor multithreaded environment. These features are described below.

Breakpoints stop programs running when a certain location is reached. You set breakpoints with the break command, followed by the line number, function name, or exact address in the program.

You can use breakpoints for both PPE and SPE portions of the code. There are some instances, however, where GDB must defer insertion of a breakpoint because the code containing the breakpoint location has not yet been loaded into memory. This occurs when you set a breakpoint in code that is dynamically loaded later in the program. If ppu-gdb cannot find the location of the breakpoint, it sets the breakpoint to pending. When the code is loaded, the breakpoint is inserted and the pending breakpoint is deleted.

You can use the set breakpoint pending command to control the behavior of GDB when it determines that the code for a breakpoint location is not loaded into memory. The syntax for this command is:

set breakpoint pending <on | off | auto>

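where

on: GDB sets a pending breakpoint automatically, without asking, if the code for the breakpoint location is not loaded.

off: GDB does not create pending breakpoints. A break command for a location that is not loaded fails with an error.

auto: GDB asks whether to set a pending breakpoint if the code for the breakpoint location is not loaded. This is the default.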

Example: Pending breakpoints

The example below shows the use of pending breakpoints. Breakpoint 1 is a standard breakpoint set for simple.c, line 23. When the breakpoint is reached, the program stops running for debugging. After set breakpoint pending is set to off, GDB cannot set breakpoint 2 (break simple_spu.c:5) and generates the message No source file named simple_spu.c. After set breakpoint pending is changed to auto, GDB sets a pending breakpoint for the location simple_spu.c:5. At the point where GDB can resolve the location, it sets the next breakpoint, breakpoint 3.

(gdb) break main

Breakpoint 1 at 0x1801654: file simple.c, line 23.

(gdb) r

Starting program: /home/user/md/simple/simple

[Thread debugging using libthread_db enabled]

[New Thread 4160655360 (LWP 2651)]

[Switching to Thread 4160655360 (LWP 2651)]

Breakpoint 1, main (argc=1, argv=0xff95f9e4) at simple.c:23

23 int i, status = 0;

(gdb) set breakpoint pending off

(gdb) break simple_spu.c:5

No source file named simple_spu.c.

(gdb) set breakpoint pending auto

(gdb) break simple_spu.c:5

No source file named simple_spu.c.

Make breakpoint pending on future shared library load? (y or [n]) y

Breakpoint 2 (simple_spu.c:5) pending.

(gdb) c

Continuing.

Breakpoint 3 at 0x158: file simple_spu.c, line 5.

Pending breakpoint "simple_spu.c:5" resolved

[New Thread 4160390384 (LWP 2656)]

[Switching to Thread 4160390384 (LWP 2656)]

Breakpoint 3, main (id=103079215104) at simple_spu.c:13

13 {

(gdb)
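The effect of the three set breakpoint pending modes can be summarized as a small decision function. The sketch below is a model for illustration, not GDB source code: the enum and function names are hypothetical, and the behavior of the on mode (create the pending breakpoint without asking) follows the GDB documentation rather than the session above.

```c
#include <assert.h>

/* Settings of `set breakpoint pending`. */
typedef enum { PENDING_OFF, PENDING_ON, PENDING_AUTO } pending_mode_t;

/* Possible outcomes of a break command in this model. */
typedef enum { BP_REJECTED, BP_PENDING, BP_INSERTED } bp_state_t;

/* Decide what happens to a break request.
 * location_loaded: nonzero if the code is already in memory.
 * user_confirms:   the answer to the "(y or [n])" query; it is
 *                  consulted only in auto mode. */
bp_state_t request_breakpoint(int location_loaded, pending_mode_t mode,
                              int user_confirms)
{
    if (location_loaded)
        return BP_INSERTED;

    switch (mode) {
    case PENDING_ON:
        return BP_PENDING;      /* created without asking */
    case PENDING_AUTO:
        return user_confirms ? BP_PENDING : BP_REJECTED;
    case PENDING_OFF:
        return BP_REJECTED;     /* "No source file named ..." */
    default:
        assert(!"unknown pending mode");
        return BP_REJECTED;
    }
}

/* When the SPE code is loaded, a pending breakpoint is resolved and
 * inserted; any other state is left unchanged. */
bp_state_t on_code_loaded(bp_state_t state)
{
    return state == BP_PENDING ? BP_INSERTED : state;
}
```

In terms of the session above, the first break simple_spu.c:5 corresponds to request_breakpoint(0, PENDING_OFF, ...) and is rejected, the second corresponds to auto mode with a y answer and becomes pending, and the continue command triggers the equivalent of on_code_loaded.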

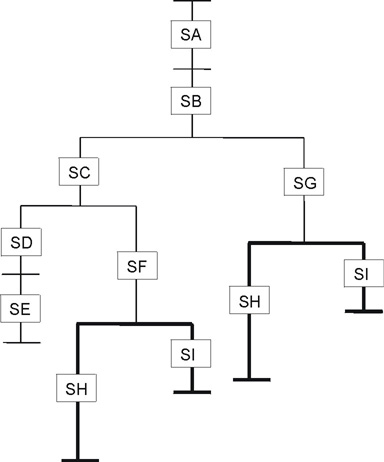

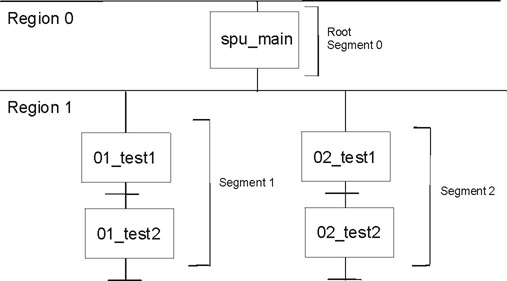

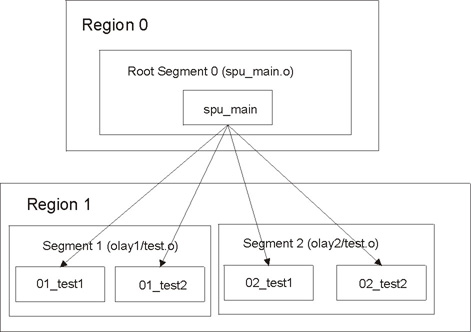

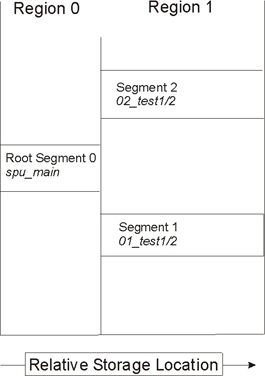

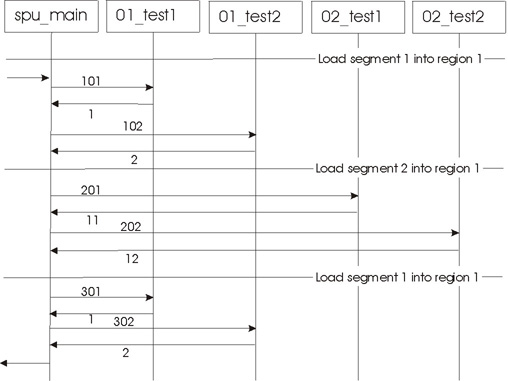
